Taking the AI Pulse

Informal poll reveals alumni attitudes about and experiences with emerging technology

by MaryAlice Bitts-Jackson

As we planned this article in early 2024, dozens of scientists signed an agreement against artificial intelligence (AI) bioweapons while touting AI’s benefits to bioscience. Large language models (LLMs) such as ChatGPT and Google’s Bard/Gemini generated increasingly sophisticated content but occasionally declined simple tasks. AI bungled a legal brief and aced mammogram interpretation. The Sports Illustrated fallout continued. Long-deceased rock stars released new material. Deepfake robocalls and videos confounded voters. OpenAI’s valuation tripled.

Welcome to the AI revolution. Rest assured: More eye-opening developments will unspool before the ink on this issue is dry. Seismic gains are predicted in medicine, education, business, climate science and space exploration, among other fields, along with revolutions in how we work, learn, innovate, problem-solve and interact. If that excites or terrifies you—or both—you’re in excellent company. (So too if you aren’t quite sure.)

Our unscientific poll revealed a spectrum of AI opinions, and our findings were analyzed with help from AI

The basics

We asked, and 191 readers responded.

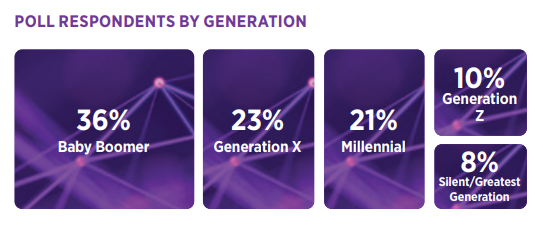

We published our casual poll in Dickinson’s January alumni newsletter. To our delight, nearly 200 Dickinsonians completed it. Forty-three are retired/do not currently work, and 40—the second-largest group, organized by profession—work in health care. Baby boomers claim the biggest generational cohort, followed by Generation X. Most use AI-powered maps and voice assistants, and 12% deploy LLMs. Only a handful use AI for automated predictive modeling, coding or driverless transportation. The typical respondent does not use AI at work (retirees are included in this count).

What excites you?

“I’m focused on utilizing AI to automate more and more of my mundane work and figuring out how it can be utilized with some of my bigger-picture items.” – Brittany Zehr ’07

For all but the youngest respondents, increased productivity/efficiency was the top answer, followed by improved decision-making and analysis, greater job satisfaction/freeing workers from routine tasks, enhanced innovation/creativity, personalization and access. (The exception: Gen Z respondents named greater job satisfaction as AI’s No. 1 benefit.)

“I use it several times a week, including for content creation,” a Gen Z auditor said.

All but 40 Dickinsonians pointed to at least one potential AI benefit, and many named several.

Sixty percent of “a little concerned” respondents are enthusiastic about AI’s potential to usher in both greater job satisfaction and improved decision-making. Many of these expect to see increased productivity/efficiency as well. Dickinsonians in business/finance are especially encouraged by AI’s potential to increase productivity and improve decision-making. Several sagely noted the need to balance technical savvy with soft skills.

How do you feel about rapid developments?

The No. 1 answer is “a little concerned, but I believe we’re on the right track,” with “very concerned” coming in second.

That top answer held true for all generational groups but the oldest, which placed both “a little concerned” and “unsure” in the No. 1 spot.

Dara Pappas ’17 is among the prudently enthusiastic. With an eye out for potential issues, she leads market research for world-class corporations, and she sees transformative ripples across her industry. “AI is a vital layer in our research,” she says, noting AI’s ability to deftly analyze huge data sets and propose novel approaches.

What concerns you?

“I’m concerned that people will get in the habit of accepting AI without questions, or without understanding,” a senior counsel for national security wrote. An exec who occasionally uses AI at work took that idea further: “Our society seems to lack the healthy skepticism necessary to process information provided by AI.”

Of the options given, Dickinsonians were most concerned about existential risks, such as the possibility of AI surpassing human capabilities. Bias/discrimination came next, followed by general job displacement. Only nine worried about losing their own jobs to AI. Forty-eight mentioned effects on human creativity/innovation; an art teacher identified “diminishing human creativity and originality” as an existential threat.

More than 20 insightful Dickinsonians used a write-in/other option to express worries about disinformation and misinformation.

“It’s getting difficult to tell the difference from reality and deep fakes,” a director of market and business intelligence observed. “People believing everything they hear or see online will only get worse … as AI generates fake pictures and videos that look believable,” an education pro added.

Personal security was another significant write-in answer. “Who gets access to the information AI gathers?” one respondent asked.

Additional concerns included risks to national and international security and to international relations and commerce; ethics; AI’s environmental costs; and insufficient regulations. Two alumnae questioned AI’s effects on us. “I don’t want to hand over my thinking to machines,” said Caly McCarthy ’17, a marketing and communications coordinator who advocates for wondering as a key aspect of human experience. McCarthy also noted the value of writing as a means of thinking ideas through and worried about ideas AI could overlook.

Tina Malgiolio Mastrangelo ’88, a retired advertising art director, added concerns about interpersonal interaction and wellness to the mix: “As computers take over more and more of our businesses and the workforce dwindles, it is easy (and scary) to imagine a world where human contact and creativity slowly disappear.”

A nuanced view

Still, it’s important to remember: The No. 1 response to “How do you feel about AI?” is “a little concerned, but I believe we’re on the right track,” and the vast majority of Dickinsonians, including respondents with substantial AI concerns, identify at least one AI benefit.

A photographer who uses AI daily to touch up images summed up the complexity. “Creatives rightly worry about their work being appropriated for AI without compensation or attribution, and yet AI has positively impacted photography,” they wrote.

Seven alumni experts, interviewed during the research stage of this article’s development, echo this nuanced approach. Each is deeply immersed in AI. Overwhelmingly, they’re galvanized by the good AI can do—while also stressing that human oversight, ethical guidelines and creativity are essential. (Visit dson.co/aialumni for our new series on alumni in AI.)

The key to such balanced human-AI collaboration? Education, a retired ad exec offered, adding: “The question is whether education—critical thinking and worldview—will be enough.”

Stephanie Teeuwen ’20, specialist in data policy and AI at the World Economic Forum, says the outcome looks positive for the well-prepared. “While no one can predict the future, I believe that the types of skills provided by a liberal-arts and science education will remain critical, including creativity and critical thinking,” she wrote, “and I am convinced that [these skills and mindsets] prepare students well to deal with this uncertain future.”

So take heart, Dickinsonians, as you buckle up for this AI roller coaster. We can’t see all the soaring ascents before us—nor any freefalls or fake-outs lying in wait. But we can proceed thoughtfully and nimbly, confident in our big-picture wisdom. Because—well, that’s just what we do.

Read more from the spring 2024 issue of Dickinson Magazine.

Published June 6, 2024